Abstract

In this analysis, I used the IBM Employee Attrition dataset to explore the main causes of employee attrition in a company. First, through an exploratory analysis, I checked that the data was clean.

At this stage, I found that the classes of the dependent variable were imbalanced. To fix this, I upsampled the minority class.
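As a rough illustration of this step, the sketch below upsamples the smaller class in base R by resampling its rows with replacement until both classes have the same size. The toy data frame `toy` and the helper `upsample_minority()` are hypothetical names used only for this example; `caret::upSample()` offers comparable behavior, and assuming it produced the `upTrain` object used later is only a guess.

```r
# Hypothetical helper: balance a data frame by resampling every smaller
# class with replacement until it matches the largest class.
upsample_minority <- function(df, outcome) {
  counts <- table(as.character(df[[outcome]]))
  target <- max(counts)
  parts <- lapply(names(counts), function(cls) {
    rows <- which(df[[outcome]] == cls)
    extra <- target - length(rows)
    # Keep every original row and draw only the missing ones at random.
    if (extra > 0) {
      rows <- c(rows, rows[sample.int(length(rows), extra, replace = TRUE)])
    }
    df[rows, , drop = FALSE]
  })
  do.call(rbind, parts)
}

set.seed(123)
# Synthetic toy data for illustration only (not the IBM dataset).
toy <- data.frame(
  attrition = factor(c(rep("No", 8), rep("Yes", 2))),
  age = c(30, 41, 35, 28, 50, 45, 33, 39, 26, 31)
)
balanced <- upsample_minority(toy, "attrition")
table(balanced$attrition)
```

Resampling of this kind is normally applied to the training partition only, so that duplicated rows do not leak into the test set.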

Once the data was ready, I fitted a decision tree, bagging, random forest, gradient boosting machine (GBM) and extreme gradient boosting (XGBoost) models. For each technique, my workflow was as follows:

- Hyperparameter tuning of each model with the `train` function of the `caret` package, using a loop when the function does not allow control of some parameters, and running in parallel on three processor cores, as in the bagging loop that follows.

```r
library(caret)       # train(), confusionMatrix()
library(pROC)        # roc()
library(doParallel)
library(tictoc)

# Run caret's resampling in parallel on three cores.
cpu <- makeCluster(3)
registerDoParallel(cpu)

# `upTrain` (the upsampled training set) and `control` (a trainControl
# object) are defined elsewhere. `control` must set savePredictions and
# classProbs = TRUE, otherwise bg$pred and bg$pred$Yes are not available.

# Convert the outcome up front: in `factor(attrition) ~ .` the dot would
# also pull the raw `attrition` column in as a predictor.
upTrain$attrition <- factor(upTrain$attrition)

nodesize_ <- c()
sampsize_ <- c()
Accuracy <- c()
Kappa <- c()
Auc <- c()

tic()
for (nodesize in c(20, 40, 60, 80, 100)) {
  for (sampsize in c(200, 500, 800, 1200, 1570)) {
    bg <- train(attrition ~ .,
                data = upTrain,
                method = "rf",
                trControl = control,
                # fix mtry for bagging (mtry = number of predictors)
                tuneGrid = expand.grid(mtry = 51),
                ntree = 5000,
                sampsize = sampsize,
                nodesize = nodesize,
                # draw each tree's sample with replacement
                replace = TRUE)

    # Resampled (held-out) predictions saved by caret.
    cm <- confusionMatrix(bg$pred$pred, bg$pred$obs)
    roc_i <- roc(response = bg$pred$obs, predictor = bg$pred$Yes)

    Acc_i <- cm$overall[["Accuracy"]]
    K_i <- cm$overall[["Kappa"]]
    Auc_i <- as.numeric(roc_i$auc)

    Accuracy <- append(Accuracy, Acc_i)
    Kappa <- append(Kappa, K_i)
    Auc <- append(Auc, Auc_i)
    nodesize_ <- append(nodesize_, nodesize)
    sampsize_ <- append(sampsize_, sampsize)

    message("---------------")
    message("With nodesize = ", nodesize)
    message("With sampsize = ", sampsize)
    message("Accuracy: ", round(Acc_i, 3))
    print(roc_i$auc)
  }
}
toc()

stopCluster(cpu)

# Aggregate metrics ----
bagging_results <- data.frame(Accuracy, Kappa, Auc,
                              nodesize = nodesize_, sampsize = sampsize_)
# save(bagging_results, file = "bagging_results.RData")

# Remove temporary objects ----
rm(Acc_i, K_i, Auc_i, cm, roc_i, nodesize, sampsize)
rm(Accuracy, Auc, Kappa, nodesize_, sampsize_)
```

- Plotting the results to understand how the fit behaves across the tuned parameters.

- For bagging and random forest, I also plotted the out-of-bag error to see how it evolved as the number of trees increased. This plot allowed me to reduce the complexity of the final model.
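The out-of-bag check described in the last item can be sketched as follows. This is only an illustration under stated assumptions: it calls the `randomForest` package directly instead of going through `caret`, uses the built-in `iris` data as a stand-in for the attrition data, and the tolerance `tol` is an arbitrary value chosen for the example.

```r
library(randomForest)

set.seed(123)
# iris ships with R and stands in for the attrition data here.
x <- iris[, names(iris) != "Species"]
y <- iris$Species

# mtry equal to the number of predictors turns the forest into bagging.
bag <- randomForest(x = x, y = y, ntree = 500, mtry = ncol(x))

# err.rate has one row per tree; the "OOB" column is the cumulative
# out-of-bag error after that many trees.
oob <- bag$err.rate[, "OOB"]
plot(seq_along(oob), oob, type = "l",
     xlab = "Number of trees", ylab = "Out-of-bag error")

# First number of trees whose OOB error is within `tol` of the final one
# (0.005 is an arbitrary illustrative threshold).
tol <- 0.005
n_small <- min(which(oob <= oob[length(oob)] + tol))
n_small
```

If the curve flattens well before the last tree, refitting with about `n_small` trees gives a cheaper model with a similar out-of-bag error. Because the curve can still fluctuate after that point, it is worth reading the plot and not relying on the threshold alone.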

After choosing the best parameters, I fitted the final model for each technique and visualized its confusion matrix and most influential variables.

Once the tree-based models were tuned, I refitted them, this time with repeated cross-validation, to compare their error rate and area under the ROC curve (AUC) in box-and-whisker plots, with a logistic regression as the reference model.
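A minimal sketch of this kind of comparison is shown below, assuming synthetic data and a single `rpart` tree standing in for the tuned tree-based models. The helpers `auc_rank()` and `repeated_cv()` are hypothetical names written for this example; the original analysis presumably relied on `caret` resampling instead.

```r
library(rpart)

set.seed(123)
# Synthetic binary data for illustration only (not the IBM dataset).
n <- 300
x1 <- rnorm(n)
x2 <- rnorm(n)
y <- rbinom(n, 1, plogis(0.8 * x1 - 0.5 * x2))
dat <- data.frame(y = y, x1 = x1, x2 = x2)

# AUC from the rank-sum (Mann-Whitney) identity.
auc_rank <- function(prob, truth) {
  n_pos <- sum(truth == 1)
  n_neg <- sum(truth == 0)
  (sum(rank(prob)[truth == 1]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Repeated k-fold cross-validation: one error rate and one AUC per fold.
repeated_cv <- function(dat, fit_fun, prob_fun, k = 5, repeats = 5) {
  res <- NULL
  for (r in seq_len(repeats)) {
    # New random fold assignment for every repeat.
    fold <- sample(rep(seq_len(k), length.out = nrow(dat)))
    for (f in seq_len(k)) {
      train_set <- dat[fold != f, ]
      test_set <- dat[fold == f, ]
      p <- prob_fun(fit_fun(train_set), test_set)
      res <- rbind(res, data.frame(
        error = mean((p > 0.5) != (test_set$y == 1)),
        auc = auc_rank(p, test_set$y)
      ))
    }
  }
  res
}

# Reference model: logistic regression.
logit <- repeated_cv(
  dat,
  fit_fun = function(d) glm(y ~ x1 + x2, data = d, family = binomial),
  prob_fun = function(m, d) predict(m, newdata = d, type = "response")
)

# Candidate model: a single classification tree.
tree <- repeated_cv(
  dat,
  fit_fun = function(d) rpart(factor(y) ~ x1 + x2, data = d, method = "class"),
  prob_fun = function(m, d) predict(m, newdata = d, type = "prob")[, "1"]
)

# Side-by-side box-and-whisker plots of both metrics.
par(mfrow = c(1, 2))
boxplot(list(logistic = logit$error, tree = tree$error), main = "Error rate")
boxplot(list(logistic = logit$auc, tree = tree$auc), main = "AUC")
```

Each box summarizes 25 resamples (5 folds times 5 repeats), so the plots show the spread of each metric as well as its typical value.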

Once I found the winning model, I built a stacked bar chart of the most influential variables according to the different models. From these variables, I created a new training/test set, which I then used to apply further ML techniques:

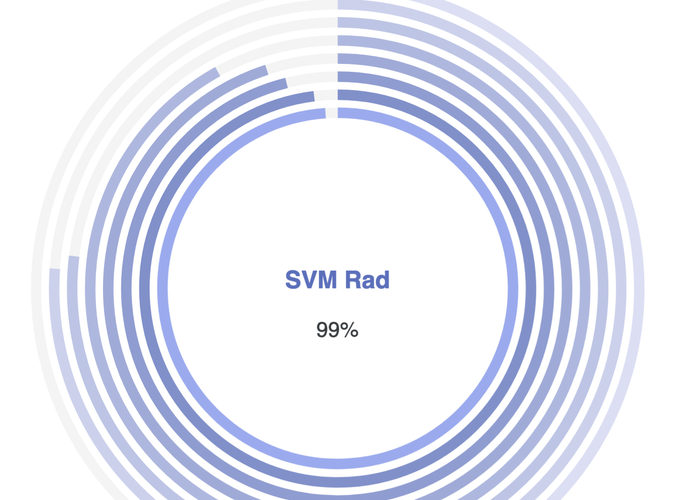

- Support Vector Machines, with kernel:
  - Linear
  - Polynomial
  - Radial
- Bagging of the linear SVM
- Boosting
- Stacking of:
  - Gradient Boosting Machine
  - SVM Radial
  - XGBoost