Web Scraping of LinkedIn Job Postings

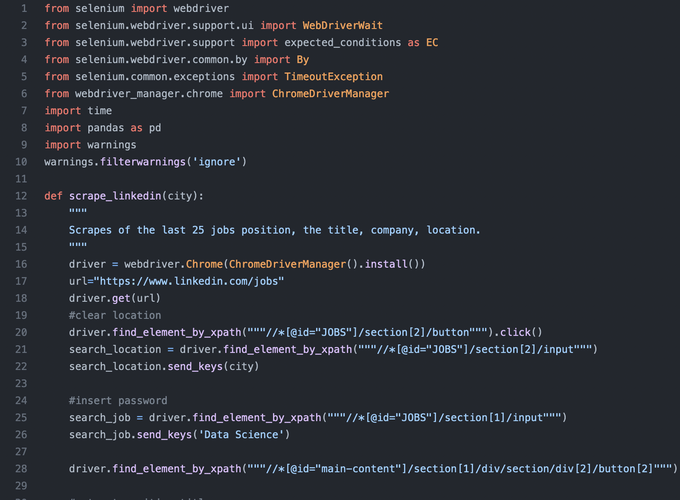

In this project I used Python and the selenium package to scrape information from the LinkedIn job search page.

The goal was to build a dataset with the following fields:

- Work location

- Employment position

- Company

- Ad link
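The four fields above could be held in a small record type and written out as CSV. This is a minimal sketch, not the project's actual code: the `JobPosting` class, its field names, the `postings_to_csv` helper, and the sample record are all assumptions made for illustration.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class JobPosting:
    # Hypothetical field names mirroring the four items in the list.
    location: str
    position: str
    company: str
    link: str


def postings_to_csv(postings):
    """Serialize JobPosting records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(JobPosting)])
    writer.writeheader()
    for posting in postings:
        writer.writerow(asdict(posting))
    return buffer.getvalue()


# Made-up sample record, only to show the shape of the data.
sample = [
    JobPosting("Milan, Italy", "Data Analyst", "Example Corp", "https://example.com/job/1")
]
csv_text = postings_to_csv(sample)
```

Using `csv.DictWriter` keeps values that contain commas, such as a "city, country" location, correctly quoted.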

Once the data were collected, I used them to plot a word cloud of the most common words in these postings.
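A word cloud is driven by word frequencies, so the counting step can be sketched on its own. The stop-word list, the `word_frequencies` helper, and the sample titles below are illustrative assumptions, not taken from the project.

```python
import re
from collections import Counter

# Small illustrative stop-word list; a real run would likely use a fuller one.
STOP_WORDS = {"and", "of", "the", "a", "in", "for", "to"}


def word_frequencies(texts):
    """Count lowercase word occurrences across texts, skipping stop words."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z]+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts


# Made-up job titles for demonstration.
titles = ["Data Analyst", "Senior Data Engineer", "Head of Data and Analytics"]
frequencies = word_frequencies(titles)
```

A mapping like `frequencies` can then be handed to a plotting tool. One option is the `wordcloud` package's `WordCloud.generate_from_frequencies`, though the tool actually used in this project is not stated here.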

For the second step, I wanted to display the job locations on a map. However, LinkedIn does not provide the geographic position of a job. I therefore used the BeautifulSoup package to scrape the company addresses from Google, then used a geocoding API, Nominatim, to retrieve the latitude and longitude of each address and plotted them with Leaflet.
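The geocoding step can be sketched as building a Nominatim search URL and reading the coordinates from its JSON response. This is a hedged sketch rather than the project's code: the helper names are hypothetical, the sample response is hand-written instead of real API output, and the HTTP request itself is left out.

```python
import json
from urllib.parse import urlencode

NOMINATIM_SEARCH = "https://nominatim.openstreetmap.org/search"


def build_search_url(address):
    """Build a Nominatim search URL asking for at most one JSON result."""
    query = urlencode({"q": address, "format": "json", "limit": 1})
    return f"{NOMINATIM_SEARCH}?{query}"


def parse_coordinates(response_text):
    """Return (lat, lon) as floats from a Nominatim JSON response, or None if empty."""
    results = json.loads(response_text)
    if not results:
        return None
    first = results[0]
    # Nominatim returns the coordinates as strings.
    return float(first["lat"]), float(first["lon"])


# Hand-written sample response, not real API output.
sample_response = '[{"lat": "45.4642", "lon": "9.1900", "display_name": "Example"}]'
coords = parse_coordinates(sample_response)
url = build_search_url("Piazza del Duomo, Milano")
```

When sending real requests, the public Nominatim service expects an identifying User-Agent header and a low request rate, so a pause between lookups is advisable.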

Sentiment Analysis of Twitter Data

This section presents a sentiment analysis of tweets about the current war in Ukraine, seen from the point of view of Eastern Europe.

The goal was to build a dataset of the geolocated tweets published from 18 to 26 March.
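The selection rule described here (geolocated tweets in a fixed date window) can be sketched as a simple filter. Everything below is assumed for illustration: the `created_at` and `coordinates` field names, the sample records, the inclusive treatment of both end dates, and the year, which is not stated in the text.

```python
from datetime import date


def in_collection_window(tweet, year):
    """True if the tweet has coordinates and was posted 18-26 March of `year` (inclusive)."""
    start, end = date(year, 3, 18), date(year, 3, 26)
    has_location = tweet.get("coordinates") is not None
    return has_location and start <= tweet["created_at"] <= end


YEAR = 2022  # assumption: the year is not given in the text

# Made-up records; field names are hypothetical.
tweets = [
    {"created_at": date(YEAR, 3, 17), "coordinates": (50.45, 30.52)},  # too early
    {"created_at": date(YEAR, 3, 20), "coordinates": (52.23, 21.01)},  # kept
    {"created_at": date(YEAR, 3, 26), "coordinates": None},            # no location
]
kept = [t for t in tweets if in_collection_window(t, YEAR)]
```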